How to create a single endpoint to access deployment pods?

Suppose we have integrated two microservice pods. If a pod gets deleted, its replacement comes up with a new IP, so anything still pointing at the old pod IP breaks.

This is where kube-proxy comes into the picture: it provides a static IP.

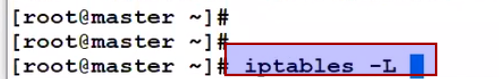

Say I need a static IP for this deployment that I can give to the end user. Kube-proxy says: I will create routing rules in the OS (iptables; list them with iptables -L) and give you a reserved IP address. Strictly speaking, the API server allocates this IP from the service IP range, and kube-proxy programs the routing rules for it on every node.

For every deployment that must be reachable, we create a service whose IP is static. That IP becomes the endpoint.

It works just like HAProxy in front of a set of backend applications: kube-proxy likewise provides a single endpoint for all the pods behind it.

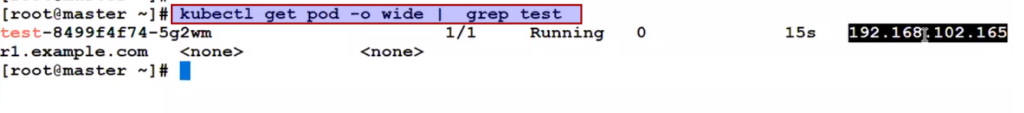

Service resources: in Kubernetes, three IP ranges come into the picture:

- Node IP range

- Pod IP range (assigned by the Calico network plugin)

- Service IP range (can be changed, but this is generally not recommended): the API server already holds one IP range reserved for service IPs, which kube-proxy then routes.

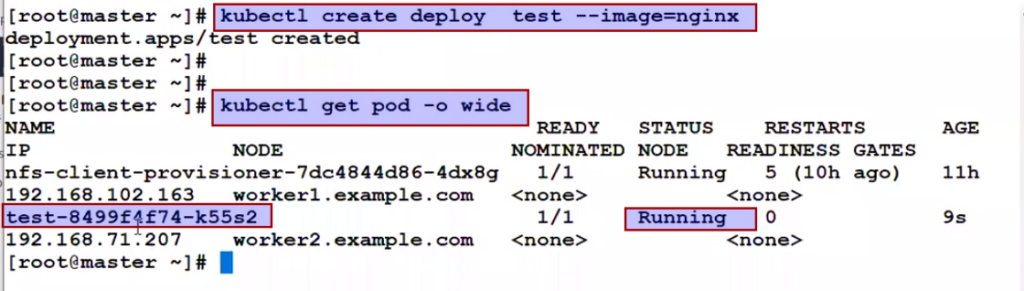

Let's see the drawback first:

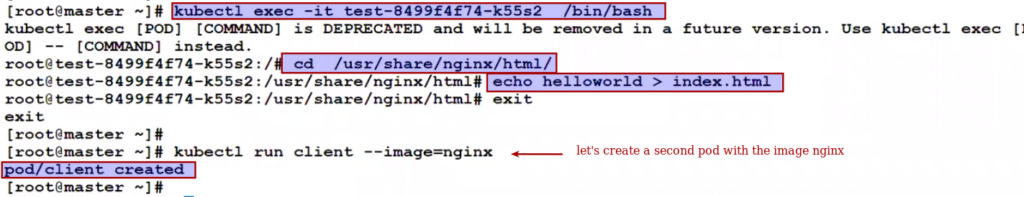

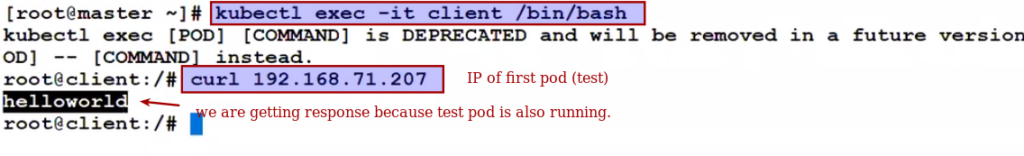

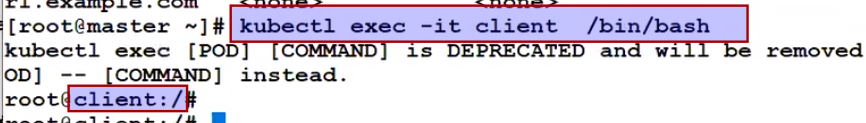

Now go into a pod and hit the first pod's IP from there:

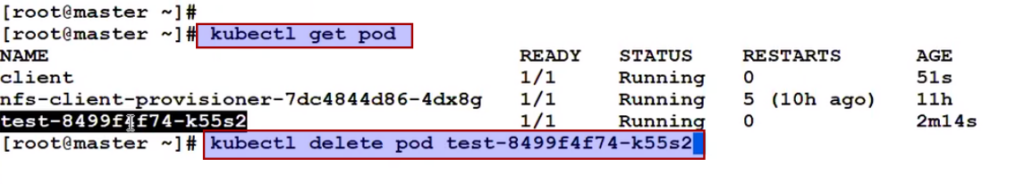

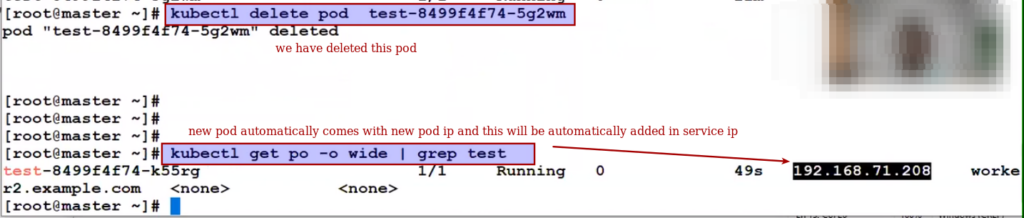

But suppose the test pod gets deleted for some reason. Because the deployment is still running, a new pod comes up, but with a different IP.

So communication breaks: the curl to the old IP no longer works.

This is where the service IP comes into the picture:

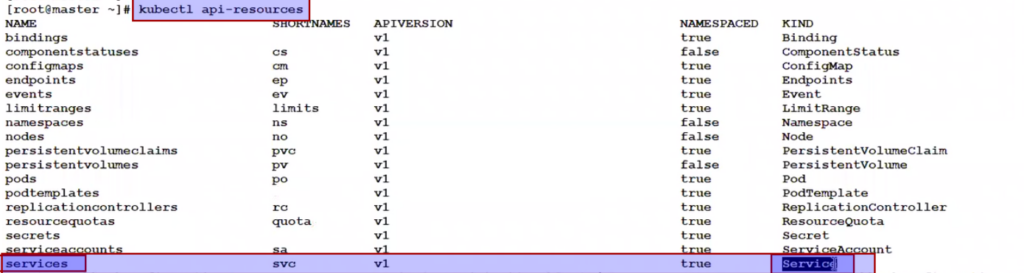

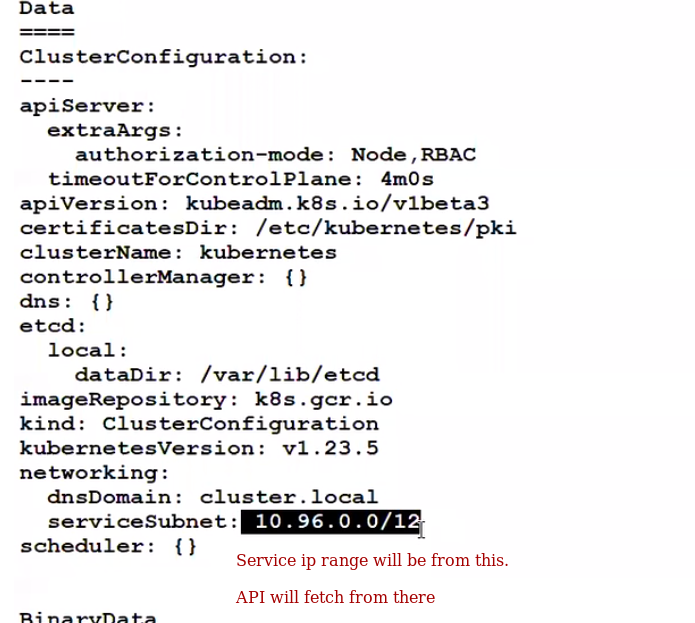

Where does this service IP come from? Which pool is it allocated from?

A ConfigMap object holds the service IP range:

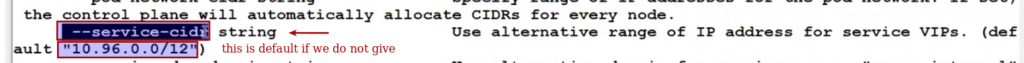

Where does this range come from? It is set when we run kubeadm init.

Check with kubeadm init --help: the --service-cidr flag sets the range (default 10.96.0.0/12).
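For reference, the relevant part of the kubeadm cluster configuration might look like the sketch below. This is an assumption about a typical kubeadm cluster. The apiVersion depends on your kubeadm release, 10.96.0.0/12 is kubeadm's default service CIDR, and the pod subnet is only an example value. The node IP range does not appear here because it comes from the network the nodes sit on. You can view the stored copy with kubectl -n kube-system get configmap kubeadm-config -o yaml.

```yaml
# Sketch of the networking section of a kubeadm ClusterConfiguration
# (kubeadm stores it in the kubeadm-config ConfigMap in kube-system).
apiVersion: kubeadm.k8s.io/v1beta3   # assumed; newer kubeadm releases use v1beta4
kind: ClusterConfiguration
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/16          # pod IP range (example value, often used with Calico)
  serviceSubnet: 10.96.0.0/12        # service IP range (kubeadm default, set by --service-cidr)
```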

How do we get a service IP?

We can create a service in two ways: via the command line or via a file:

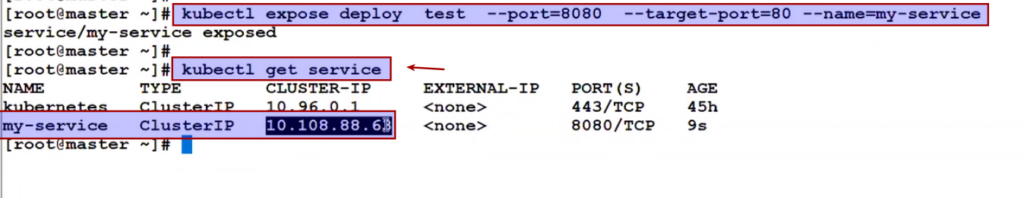

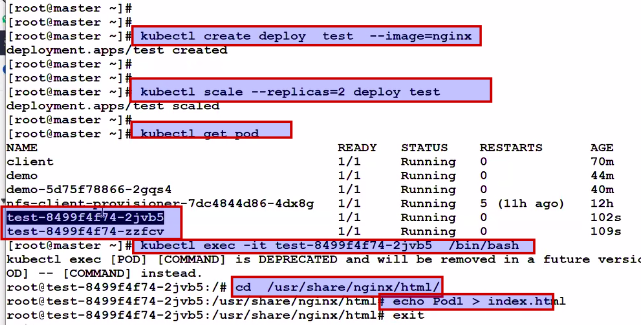

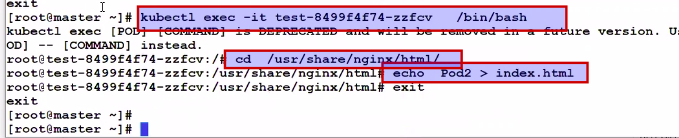

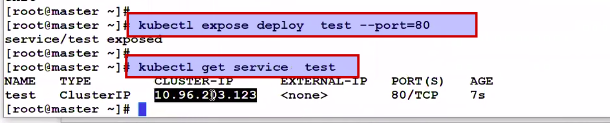

Command line:

Kube-proxy says: when you expose the deployment, you need to give me a port. I am a proxy server, and for a proxy server you have to specify which port your service IP will listen on.

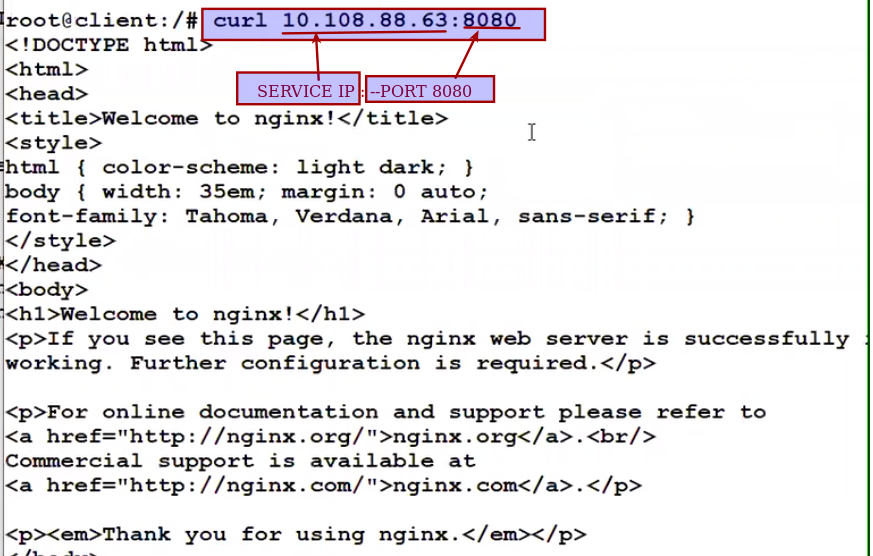

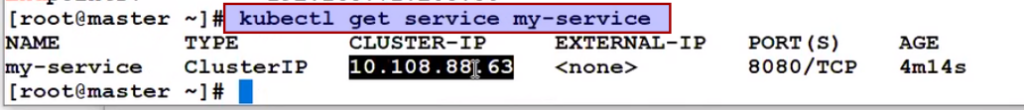

kubectl expose deploy test --port=8080 --target-port=80 --name=my-service (if you omit --name, the service gets the same name as the deployment)

--port=8080: this is the port given to the end user (I will give --port=8080 to the other microservice team). It can be any port of our choice.

--target-port=80: this is not our choice; it is the port the container listens on. It tells kube-proxy to forward the traffic it receives for this deployment to this target port.

Don't confuse "expose" here with the Docker concept of making a container reachable from the outside world; that is a different topic. By default, kubectl expose creates a ClusterIP service, which is reachable only from inside the cluster.
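The same service can also be written as a manifest. This is a sketch of what the expose command above corresponds to. It assumes the deployment was created with kubectl create deployment test, so its pods carry the label app: test.

```yaml
# Sketch: declarative equivalent of
#   kubectl expose deploy test --port=8080 --target-port=80 --name=my-service
# Assumes the pods of deployment "test" carry the label app: test.
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  type: ClusterIP        # default type: reachable only inside the cluster
  selector:
    app: test            # traffic goes to pods with this label
  ports:
  - protocol: TCP
    port: 8080           # port the end user (other microservice) connects to
    targetPort: 80       # port the container listens on
```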

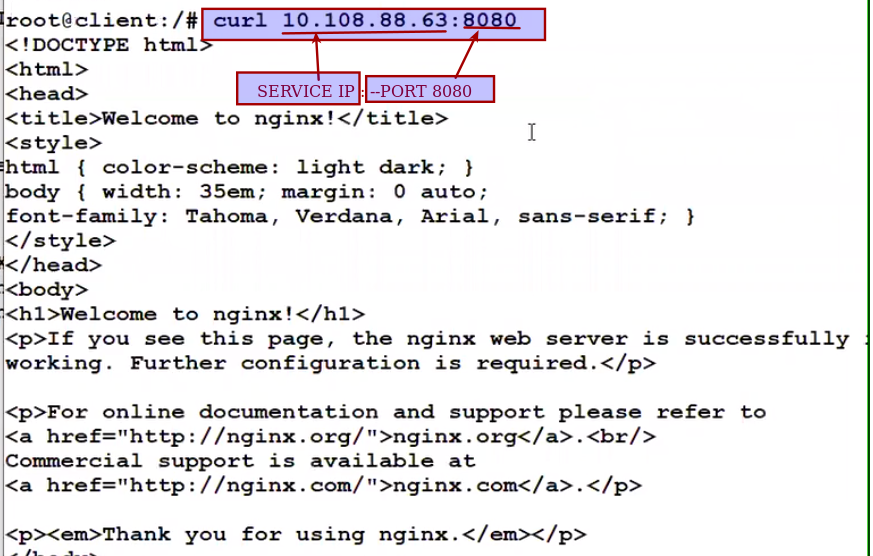

Now we give this service IP to our end user (the other microservice).

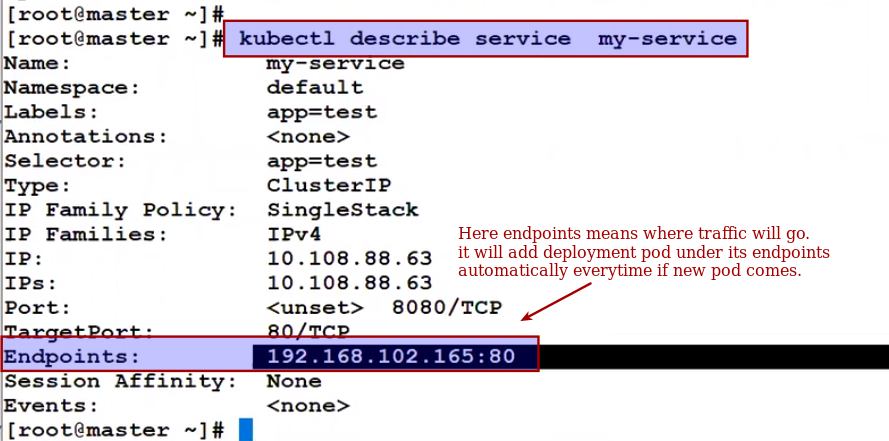

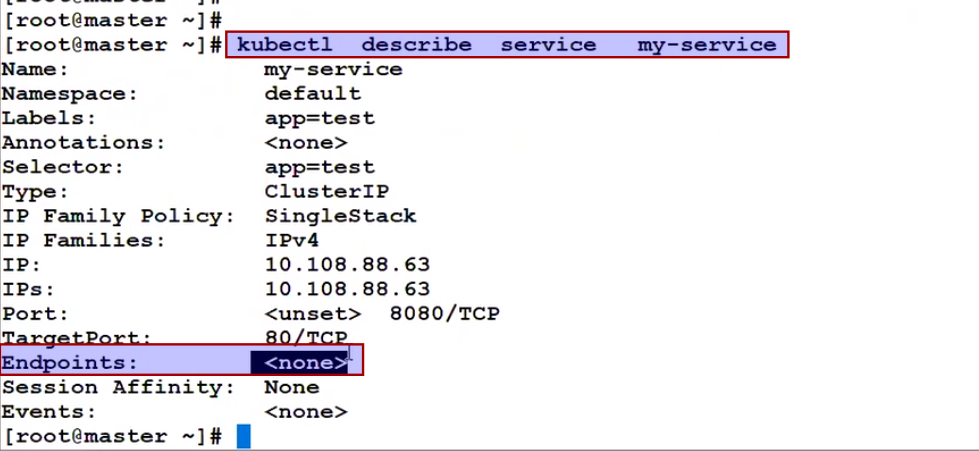

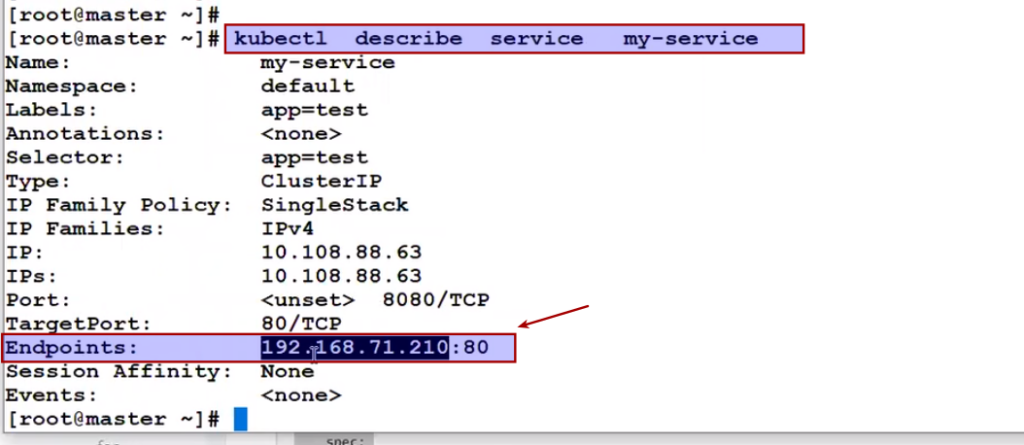

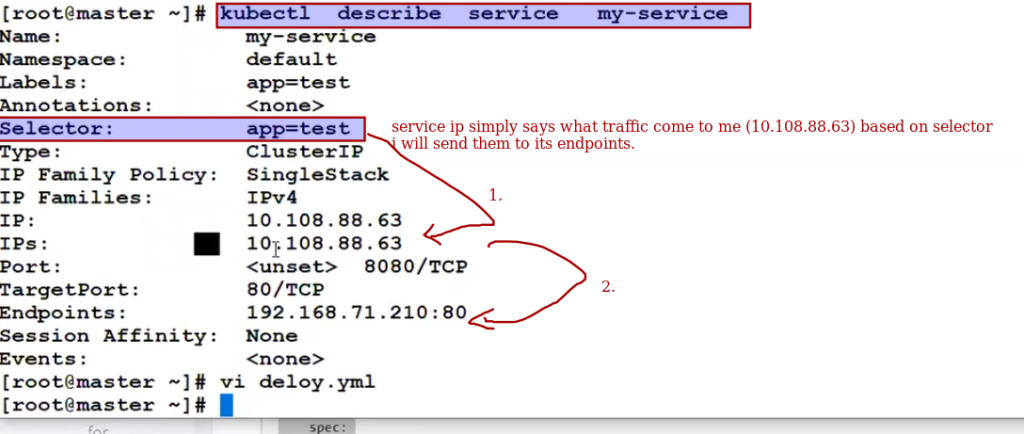

If we describe our service (kubectl describe svc my-service):

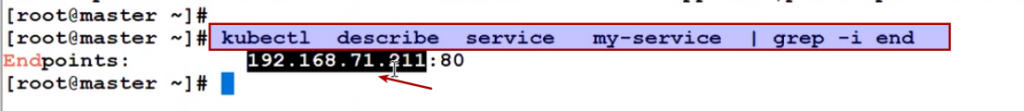

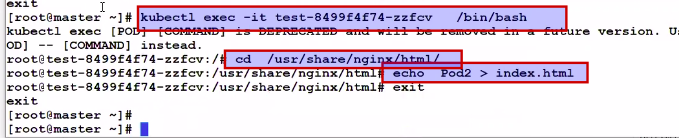

If this pod gets deleted, let's see what happens:

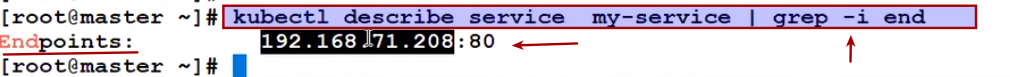

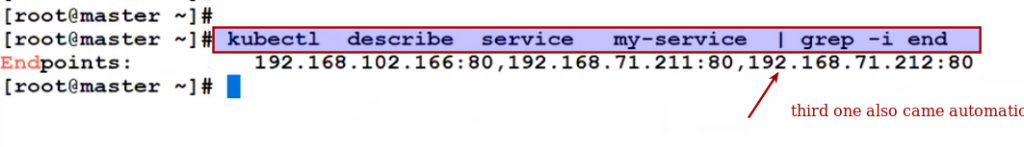

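Kube-proxy says: I have this intelligence; I will add the new pod to the endpoints automatically. Strictly speaking, the endpoints controller updates the endpoint list, and kube-proxy updates its rules to match.

The service IP stays the same: that is our endpoint.

We can still reach it.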

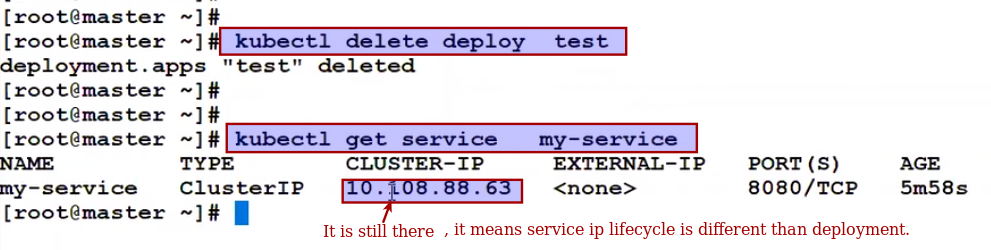

Now suppose I have deleted this deployment

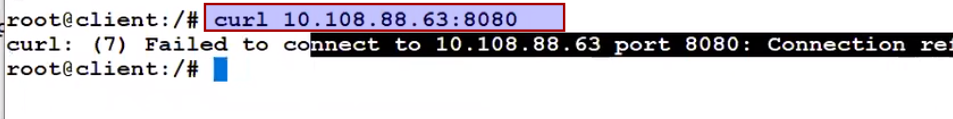

But now question where the traffic will go.. ? Because the endpoint deployment is not there.

It won't go anywhere..

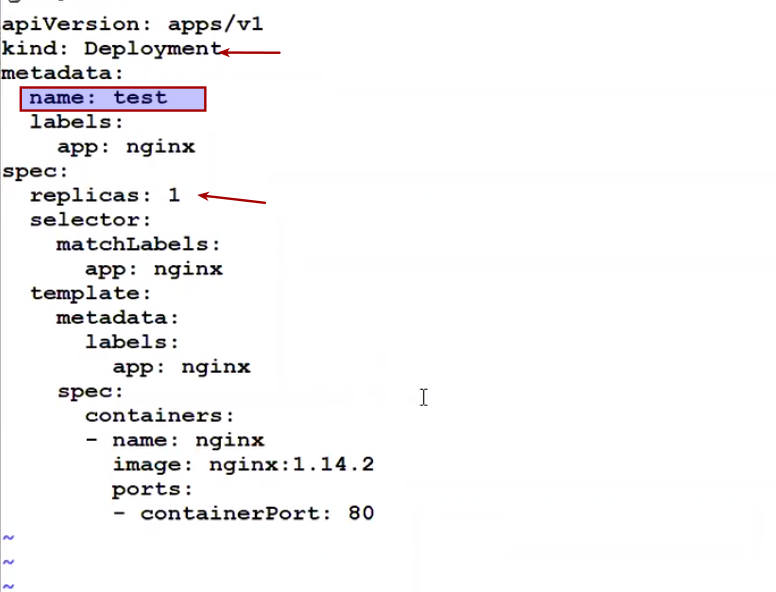

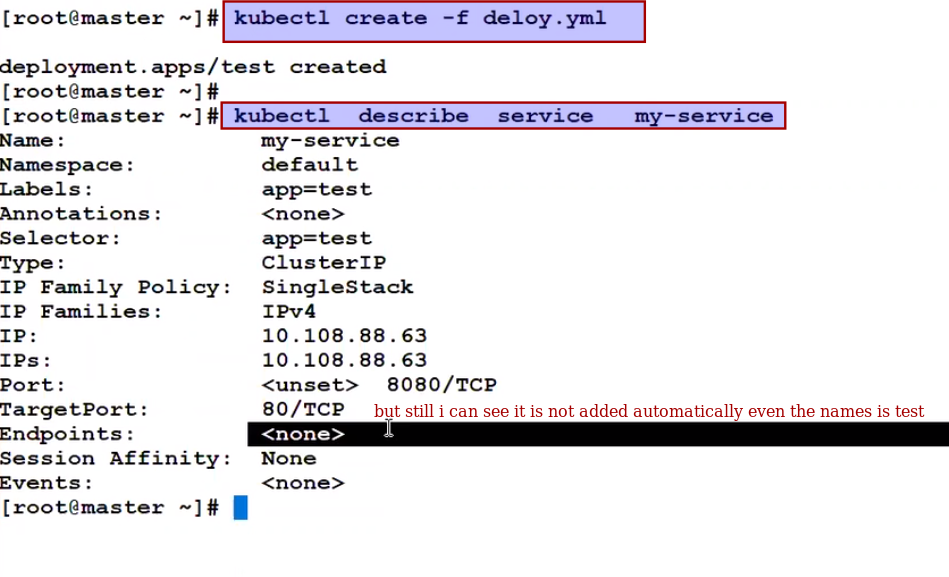

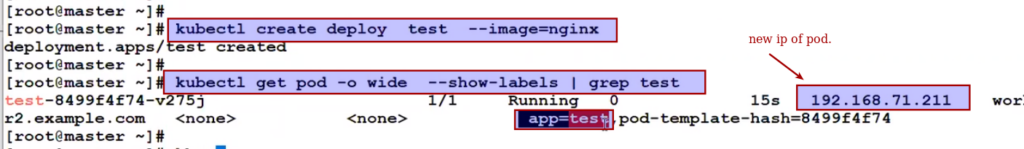

Now the question arises: if I create a deployment named test again, will the service pick it up automatically?

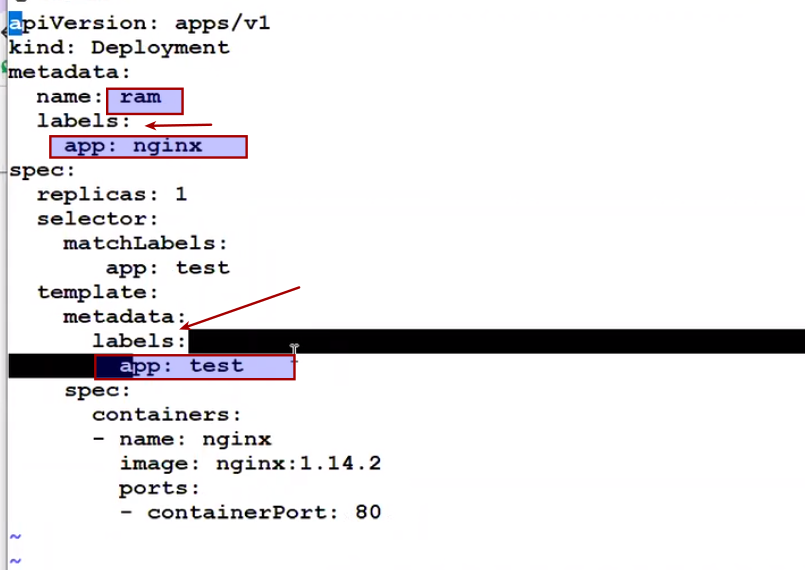

This shows that even when the name matches, the service does not pick up a deployment by its name. So how does the service claim a deployment as its own?

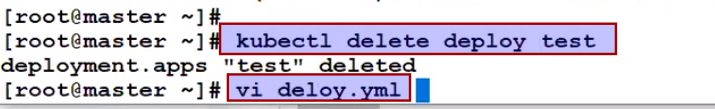

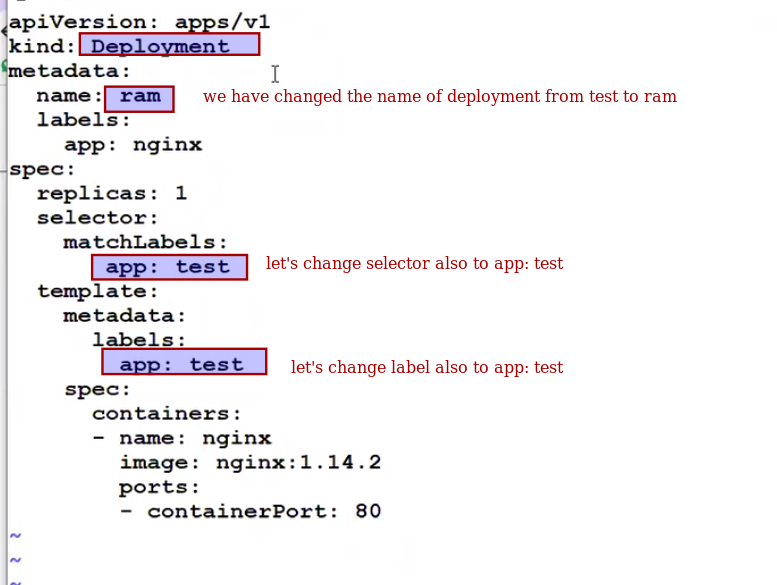

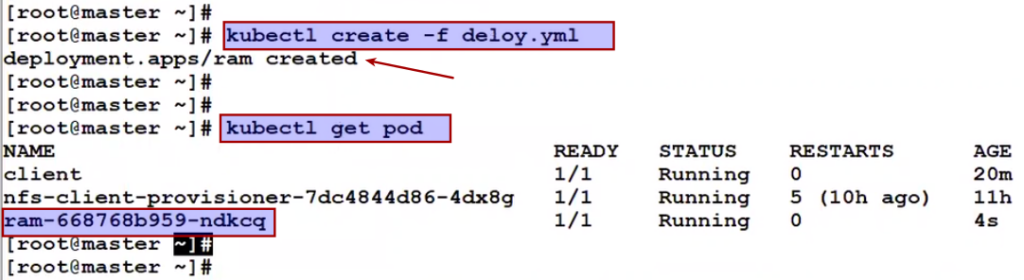

So let's delete this deployment named test and create one with another name.

This time the service picks up the deployment named ram automatically.

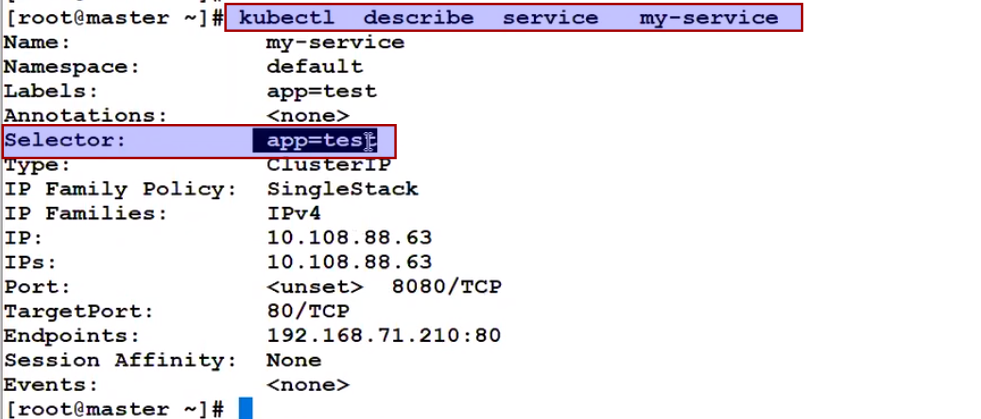

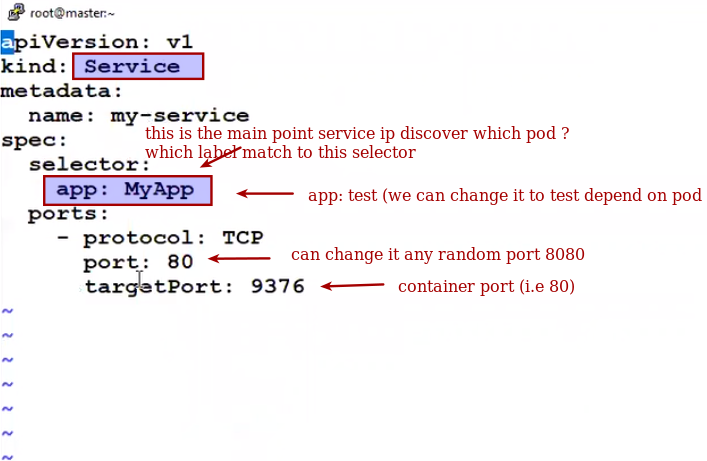

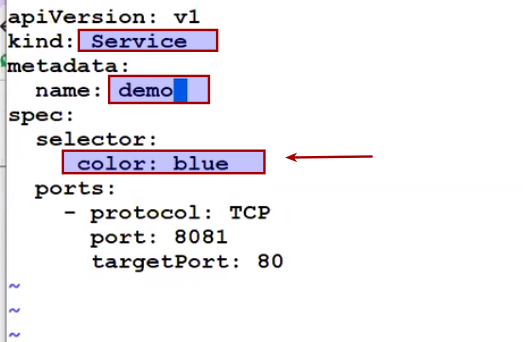

But how? The answer is the selector.

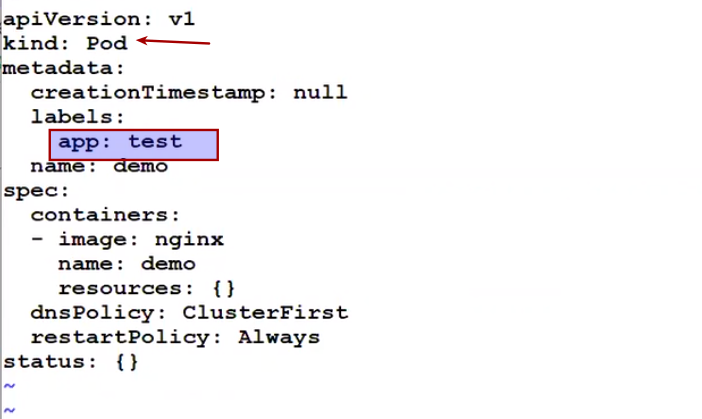

Conclusion: a service never discovers a deployment by its name; it works on the selector concept. It says: I will send traffic to every pod whose labels match my (the service's) selector.

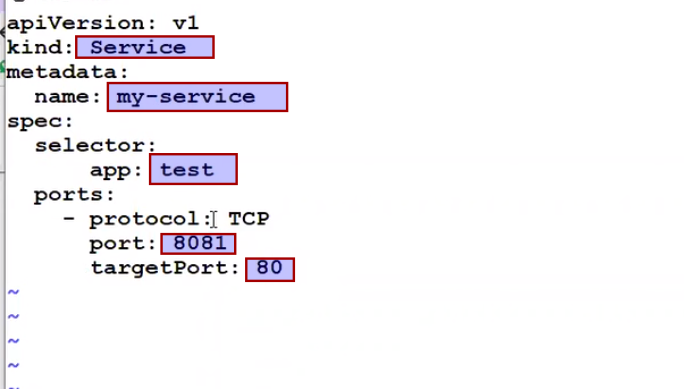

That's why we changed the deployment's label to app: test.

But how did app: test get into the service's selector?

When we run kubectl create deployment test, the label key app is created automatically and test (the deployment name) becomes its value. kubectl expose then copies the deployment's selector (app: test) into the service's selector.
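To see where that label lands, here is a trimmed sketch of what kubectl create deployment test generates. nginx is an assumed image. You can print the full version with kubectl create deployment test --image=nginx --dry-run=client -o yaml. The service selector matches the labels in the pod template.

```yaml
# Trimmed sketch of "kubectl create deployment test --image=nginx" output
# (nginx is an assumed image). The label app: test appears in three places.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test
  labels:
    app: test            # label on the Deployment object itself
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test          # which pods this Deployment manages
  template:
    metadata:
      labels:
        app: test        # label on every pod; this is what the service selector matches
    spec:
      containers:
      - name: nginx
        image: nginx
```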

See

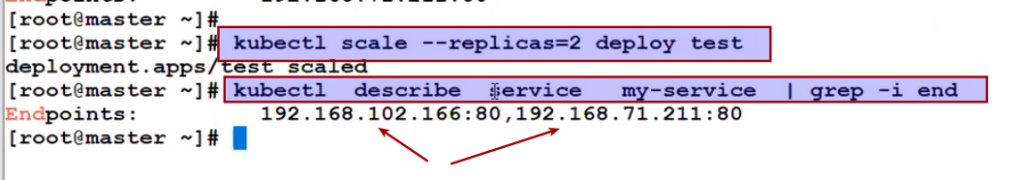

If we scale this deployment tomorrow, the new pod IPs are also added automatically, because the new pods carry the same label app: test.

That's why pod labels are mandatory; otherwise the service cannot route traffic to the pods.

It also doesn't matter if the pod is an orphan (it has no ReplicaSet or deployment): if its labels match, the service adds it to its endpoints.
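As an illustration, a standalone pod like the hypothetical one below would be added to the endpoints of the app: test service once it is Ready, even though no deployment owns it. The pod name and image are assumptions.

```yaml
# Hypothetical orphan pod: no ReplicaSet or Deployment owns it, but its
# label matches the service selector app: test, so it becomes an endpoint.
apiVersion: v1
kind: Pod
metadata:
  name: orphan-pod       # hypothetical name
  labels:
    app: test
spec:
  containers:
  - name: nginx
    image: nginx         # assumed image, listening on port 80
    ports:
    - containerPort: 80
```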

File method:

https://kubernetes.io/docs/concepts/services-networking/service/

kubectl create -f service.yml

With kubectl create deployment, we don't define the app label ourselves: the key app with the deployment name as its value is created automatically. In a manifest file, we write the labels and the selector ourselves.

Each deployment that other services need to reach gets its own service IP. If the deployment's pods have the label color: blue, the service selector must be color: blue as well.
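A minimal sketch of the color: blue case for the file method follows. The names blue-app and blue-svc, the image, and the ports are hypothetical. The only part that matters is that the service selector equals the pod template labels.

```yaml
# Hypothetical deployment whose pods carry color: blue ...
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blue-app
spec:
  replicas: 2
  selector:
    matchLabels:
      color: blue
  template:
    metadata:
      labels:
        color: blue      # pod label the service looks for
    spec:
      containers:
      - name: web
        image: nginx     # assumed image, listening on port 80
---
# ... and a service whose selector matches that label.
apiVersion: v1
kind: Service
metadata:
  name: blue-svc
spec:
  selector:
    color: blue          # must equal the pod template labels above
  ports:
  - port: 8080
    targetPort: 80
```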

One namespace can contain multiple services.

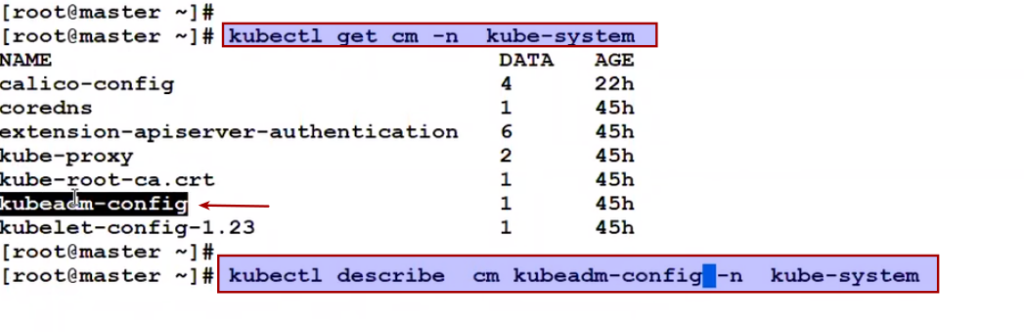

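Where is this service IP maintained in the backend?

On every node, in iptables; we can see it with the command iptables -L. In iptables mode, the per-service rules live in the nat table, so iptables -t nat -L KUBE-SERVICES -n lists them directly.

kube-proxy runs on every node (in kubeadm clusters, as a DaemonSet in kube-system). Once a service gets its IP, kube-proxy writes the routing rules for it into iptables.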

######################################################################

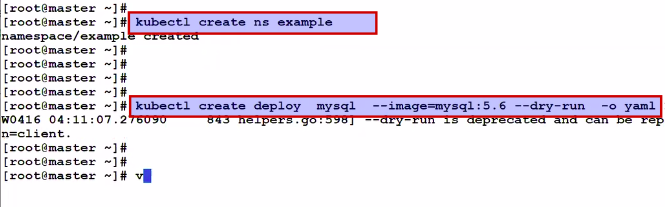

Practical example

######################################################################

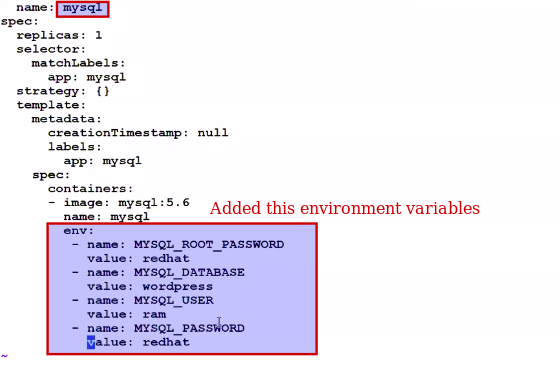

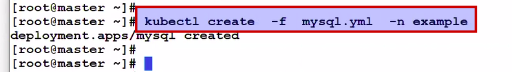

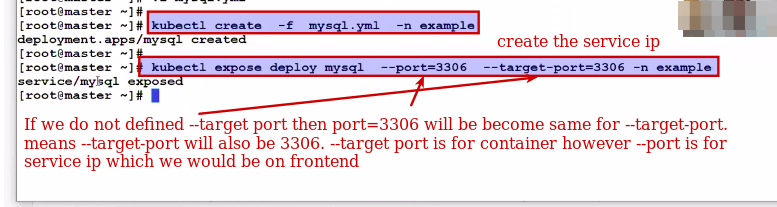

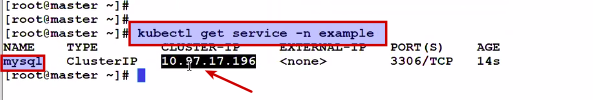

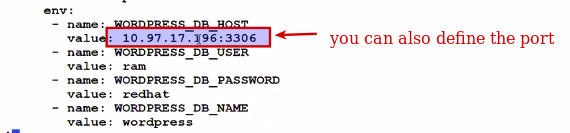

We have two deployments, mysql and wordpress. WordPress talks to MySQL in the backend, so we have to create a service IP for mysql.

Whenever someone asks you for the MySQL IP, always give the service's cluster IP, never the container (pod) IP.
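A sketch of the mysql service as a manifest follows. It assumes the deployment was created with kubectl create deployment mysql, so its pods carry app: mysql. The service name mysql is hypothetical. 3306 is MySQL's default port.

```yaml
# Sketch of a ClusterIP service for the mysql deployment.
# Assumes the mysql pods carry the label app: mysql; the name is hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  type: ClusterIP
  selector:
    app: mysql
  ports:
  - port: 3306           # port WordPress connects to
    targetPort: 3306     # MySQL's default listening port inside the container
```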

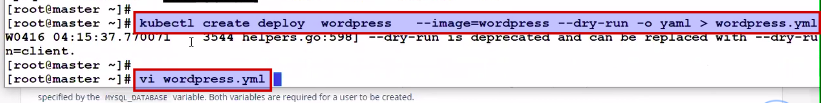

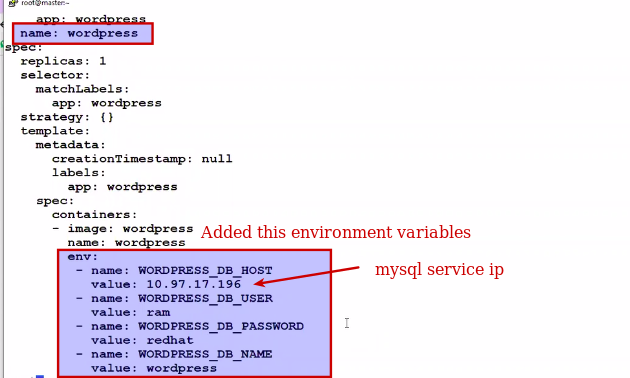

Now create the deployment for wordpress:
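A minimal sketch of a wordpress deployment that points at MySQL through the service, not a pod IP. The official wordpress image reads the database host from WORDPRESS_DB_HOST. The value below is a placeholder for the mysql service's CLUSTER-IP, as shown by kubectl get svc. With cluster DNS, the service name would also work. Credentials such as WORDPRESS_DB_USER and WORDPRESS_DB_PASSWORD are omitted here.

```yaml
# Sketch: wordpress deployment using the mysql service's cluster IP.
# "<mysql-cluster-ip>" is a placeholder; DB credentials are omitted.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
      - name: wordpress
        image: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: "<mysql-cluster-ip>:3306"   # service IP, never the mysql pod IP
        ports:
        - containerPort: 80
```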

Now the question arises: we created a service IP for MySQL, so do we need one for WordPress too? Not for now; we will need one when we want to expose WordPress externally.

For now, only MySQL needs a static IP: it is the backend, and a cluster IP provides that.

Here we learned how we can integrate two microservices:

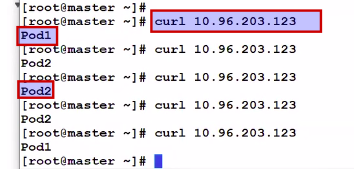

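#### Load distribution across pods ####

The service IP also acts as a load balancer, because kube-proxy spreads traffic across all pods that match the selector: a pod-level load balancer.

Balancing policy: in kube-proxy's default iptables mode, each new connection goes to a randomly chosen pod. Least connection (lc) is available only when kube-proxy runs in IPVS mode, where the default scheduler is round robin (rr).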
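If you do want least-connection balancing, kube-proxy has to run in IPVS mode. Below is a minimal sketch of the kube-proxy configuration. It assumes a kubeadm cluster, where this lives in the kube-proxy ConfigMap in kube-system. The IPVS kernel modules must be available on the nodes, and the kube-proxy pods must be restarted to pick up the change.

```yaml
# Sketch: switch kube-proxy to IPVS mode with the least-connection scheduler.
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  scheduler: "lc"        # least connection; IPVS mode defaults to "rr" (round robin)
```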

Here is an example Service object as returned by the API server (the kube-state-metrics service in kube-system). Note the selector, the ClusterIP type, and sessionAffinity: None:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app.kubernetes.io/component":"exporter","app.kubernetes.io/name":"kube-state-metrics","app.kubernetes.io/version":"2.3.0"},"name":"kube-state-metrics","namespace":"kube-system"},"spec":{"ports":[{"name":"http-metrics","port":8080,"targetPort":"http-metrics"},{"name":"telemetry","port":8081,"targetPort":"telemetry"}],"selector":{"app.kubernetes.io/name":"kube-state-metrics"}}}
  creationTimestamp: "2023-01-03T10:24:14Z"
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.3.0
  name: kube-state-metrics
  namespace: kube-system
  resourceVersion: "100977850"
  uid: 084f1482-511e-40f5-a270-ab7e67c81d57
spec:
  clusterIP: 172.20.166.31
  clusterIPs:
  - 172.20.166.31
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: http-metrics
    port: 8080
    protocol: TCP
    targetPort: http-metrics
  - name: telemetry
    port: 8081
    protocol: TCP
    targetPort: telemetry
  selector:
    app.kubernetes.io/name: kube-state-metrics
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
```